What is Deep Learning AF: how does Canon's AI-powered autofocus work?

Deep Learning AF is powering the Canon EOS-1D X Mark III's incredible autofocus – but what *is* deep learning?

Canon has made a lot of noise about its new Deep Learning AF system, which sits at the heart of the manufacturer's latest flagship professional camera. It sounds incredibly clever, but there are plenty of questions – what is Deep Learning? Who does the teaching? Does the system learn as you shoot? Is it really artificial intelligence in a camera? Does it actually make the autofocus any better?


If you've read our Canon EOS-1D X Mark III review, you'll know that the answer to the last question is a resounding yes. As for the answers to the other questions about Deep Learning AF, grab yourself a drink and a snack and read on…

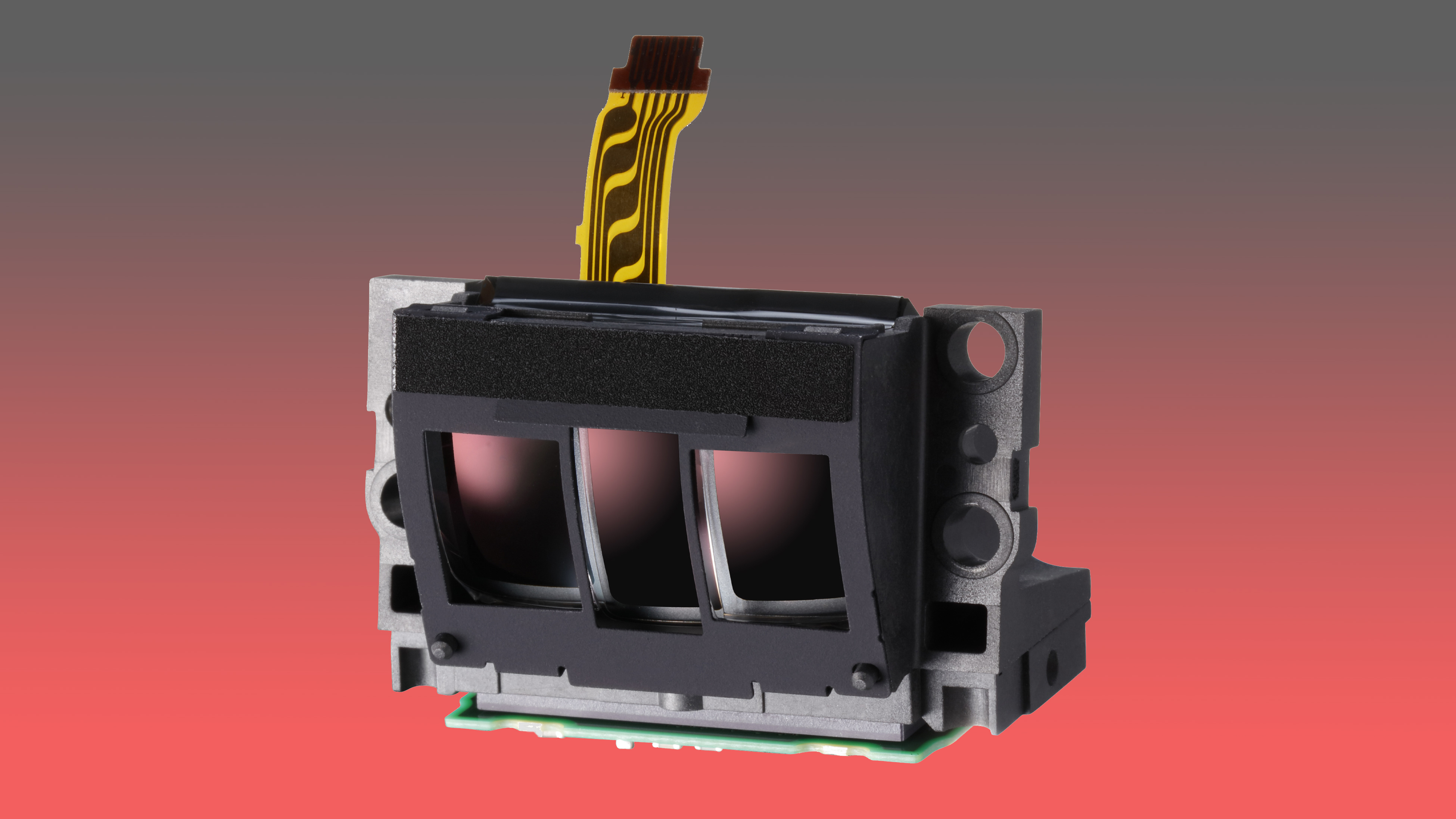

The autofocus mechanics of the Canon EOS-1D X Mark III are incredibly clever, supporting two separate AF systems. First is the optical system, which shoots at 16 frames per second through the viewfinder, using a 400,000-pixel metering sensor in conjunction with a dedicated Digic 8 processor for 191-point AF capable of face tracking.

Then there's the Live View system, which can shoot at 20 frames per second, employing all 20.1 million pixels of the image sensor combined with the new Digic X processor for 3,869 Dual Pixel CMOS points that can perform full eye-detection AF.

Powering both of these systems is Canon's core EOS iTR AF X technology – the latest iteration of its Intelligent Tracking and Recognition Auto Focus, which debuted in the original EOS-1D X (and subsequently made its way to the 7D Mark II and 5D family). And buried within its circuitry is the Deep Learning algorithm.

Deep Learning is NOT the same as AI

First of all, it's important to clarify that Deep Learning is not to be confused with artificial intelligence (AI). An AI system is one that is in an ongoing state of development. Deep Learning, a form of machine learning, is a subset of AI.


Unlike true AI, Deep Learning is a closed process. The algorithm is trained before the camera is assembled, essentially teaching itself far more quickly than human engineers could program it by hand. Once this learning is complete, the result is locked down and loaded into the camera.

From that point, no more learning is possible. Despite the name – and Deep Learning is the name of the technology, not a description of what happens inside the camera – the camera is not constantly learning, and will not get 'better' the more you shoot (indeed, a true AI system would learn as many of your bad habits as it would your good ones!).
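To make the "locked down" idea concrete, here is a deliberately simple Python sketch. It is a toy, not Canon's code: the "model" is just a handful of numbers assumed to have come out of training on a computer, stored in a frozen dataclass. Using it to make decisions only reads those numbers, so no amount of shooting changes them. The class name, the weights and the three-number "frames" are all hypothetical.

```python
from __future__ import annotations

from dataclasses import FrozenInstanceError, dataclass


@dataclass(frozen=True)
class FrozenModel:
    """Hypothetical weights fixed before shipping; nothing here updates them."""

    weights: tuple[float, ...]
    bias: float

    def score(self, features: tuple[float, ...]) -> float:
        # Inference only: a weighted sum of the input features.
        return sum(w * x for w, x in zip(self.weights, features)) + self.bias

    def is_person(self, features: tuple[float, ...]) -> bool:
        return self.score(features) > 0.0


# These numbers stand in for the result of offline training (hypothetical).
model = FrozenModel(weights=(0.8, -0.5, 1.2), bias=-0.3)

# "Shooting": the model is only ever read, never modified.
frames = [(1.0, 0.2, 0.5), (0.1, 0.9, 0.0), (0.6, 0.1, 0.4)]
results = [model.is_person(f) for f in frames]
print(results)  # [True, False, True]
```

Trying to change the model in place, for example `model.bias = 0.0`, raises `FrozenInstanceError`. In this toy, the only way to change the camera's behaviour is to replace the whole model with a newly trained one.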

"It’s been taught," explains Mike Burnhill, technical support manager for Canon Europe. "You put it into a computer, it creates the algorithm that’s then loaded into the camera. So it’s different from AI – AI is a continual learning; deep learning is basically, it teaches itself, and gives you an end result that is then loaded into the camera."

This raises the question: with so many companies shouting about AI-based features, is a camera actually capable of supporting artificial intelligence?

"The processing power to do true AI is not feasible in a camera," says Burnhill. "If you want to do that, there are phones – but the data’s not in your phone, it’s in Silicon Valley. That’s where the AI system is. It’s just, your phone connection’s connecting to it – it’s not here, it’s there [in the cloud], because you need a server. We could do a camera, but you’d be lugging a giant flight case around with you all the time."

How does Deep Learning teach itself?

So, the Deep Learning algorithm teaches itself – but where does it actually learn from? The answer, put simply, is 'from the best'.

"Canon worked with our agencies," Burnhill tells us. "We received basically access to their whole image database of sports photography, from all the major agencies, we worked with our ambassadors who shoot sports, and they provided their images of different subjects, and it allowed us to teach this AF system how to recognize people in sports."

Sport was the obvious subject for this training, because the Canon EOS-1D X Mark III is primarily a sports camera. The problem is that, whether it's a basketball player facing away from the camera, a skier wearing goggles or a Formula One driver wearing a helmet, people in sports often have their faces obscured. That means traditional face detection, or even eye-detection AF, doesn't work, and the camera will instead lock on to things like the numbers on a player's uniform.

By giving the Deep Learning algorithm access to a vast library of images, of everything from upside-down gymnasts to hockey players wearing pads and helmets, Canon enabled it to learn to recognize the human form in an endless variety of situations. The result is 'head detection': even if a person's face is not visible, the head remains the primary point of focus.
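Learning from a library of labelled examples like this is generally known as supervised learning: the system is shown images tagged "person" or "not a person" and adjusts itself until its answers match the tags. The Python sketch below is a toy illustration of that idea using a classic perceptron on made-up two-number "features". Real deep learning systems use far larger neural networks trained on actual image data, and nothing here reflects Canon's own algorithm. The feature values, learning rate and number of passes are all hypothetical.

```python
from __future__ import annotations


def predict(weights: list[float], bias: float, x: tuple[float, ...]) -> int:
    """Return 1 ('person') if the weighted sum is positive, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(
    examples: list[tuple[float, ...]],
    labels: list[int],
    epochs: int = 20,
    lr: float = 0.1,
) -> tuple[list[float], float]:
    """Learn weights from labelled examples (1 = person, 0 = not a person)."""
    weights = [0.0] * len(examples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(examples, labels):
            error = y - predict(weights, bias, x)  # 0 if correct, +1 or -1 if wrong
            # The weights only move when the guess was wrong (error != 0).
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Hypothetical two-number features per image, each labelled by a human.
examples = [(0.9, 0.8), (0.8, 0.9), (0.7, 0.6), (0.1, 0.2), (0.2, 0.1), (0.3, 0.2)]
labels = [1, 1, 1, 0, 0, 0]

weights, bias = train_perceptron(examples, labels)
print([predict(weights, bias, x) for x in examples])  # [1, 1, 1, 0, 0, 0]
```

On this toy data the weights stop changing after the third pass, once every example is classified correctly. The trained model then also handles examples it has not seen: `predict(weights, bias, (0.85, 0.75))` returns 1 and `predict(weights, bias, (0.15, 0.15))` returns 0.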

"Deep learning is basically there’s images, you create a set of rules for it to learn by, and then off it goes and it creates its own algorithm based," Burnhill continues. "So you set the parameters of what the person would look like, you go, 'Here is the person,' then it analyzes all the images of people and says, ‘This is a person’, ‘That is a person’. It goes through millions of images over a period of time and creates that database, and it learns by itself."

In fact, the algorithm creates two databases: one to serve the optical viewfinder AF system and metering, using Digic 8, and one to serve the Live View AF system, which uses Digic X. Since it's the Digic X that does all the computation for head tracking, once the AF algorithm detects a person in the frame, everything is pushed over to the new processor.

"Once you’ve got a person in, you’ve actually got dual processing going on," says Burnhill. "There’s two databases here, because the input from both sensors is going to be slightly different, so how it’s recognized will be slightly different, so these are subsets of the same algorithm. The core data for both of them is the same, it’s just how it will be recognized and the right data applied to it."

If it can't learn new things… what about animal AF?

Of course, the Canon EOS-1D X Mark III isn't just a sports camera – its other key audience is wildlife shooters. Yet the camera doesn't possess animal autofocus capability, and we've established that Deep Learning can't actually learn any new tricks once it has been baked into the camera. So is that it? With all this fancy new tech, will the camera not even focus on the family dog?

It's true that, right now, the camera doesn't feature animal (or animal eye) AF. "Basically we’re concentrating on people to start off with to get that kind of algorithm working first," responds Burnhill. "That’s why we’ve kind of focused on sport, because that is a set parameter and we can teach it in a certain period of time."

The answer, then, lies in firmware. Burnhill confirmed that Canon could carry out further Deep Learning for subjects such as birds and other wildlife, and distribute the updated algorithm to users via firmware updates, though there are no concrete plans to announce yet.

"We’ll be developing it all the time, so at the moment it’s still undecided how and where we go. But the development team is going and looking at other animal photography – we realize there’s a whole host of fields, but obviously the big focus of this camera is sport and then wildlife, and obviously with Tokyo 2020 this was the priority."

It's a fair point: if Canon had waited for Deep Learning to learn everything, the camera would have taken longer to release. And although manufacturers like Sony offer selective animal AF in their cameras, Burnhill notes that Canon would much rather release a complete animal AF solution than a selective, piecemeal one. This is where Deep Learning will become invaluable.

"The trouble is with wildlife, there are lots of different animals – you obviously have predators with the eyes at the front, and then you have rabbits' [eyes] at the side, you have snakes, you have birds… there’s no system that recognizes the faces of all animals. And that’s where you get into this whole Deep Learning, of teaching the system to recognize these complex things."

So while your Sony may be able to track your dog or your cat (but not a salamander or a flamingo), Canon wants to produce a camera that does all or nothing. "If we were going to do it, we’d want to do it for as wide a spectrum – we don’t want to make a dog-friendly camera and a cat-friendly camera, we want to make an animal-friendly camera that works for the wide range of animals that [professionals] would shoot."


James has 22 years' experience as a journalist, serving as editor of Digital Camera World for 6 of them. He started working in the photography industry in 2014, product testing and shooting ad campaigns for Olympus, as well as clients like Aston Martin Racing, Elinchrom and L'Oréal. An Olympus / OM System, Canon and Hasselblad shooter, he has a wealth of knowledge on cameras of all makes – and he loves instant cameras, too.