What is pixel binning? Everything you need to know about this camera tech

Want a 100+ megapixel camera in a super-slim smartphone? No problem, thanks to the wonders of pixel binning

Have you seen the term 'pixel binning' mentioned casually in our camera reviews and wondered what this rather cryptic label actually means? We're here to shed some light on this commonly used process in digital imaging, especially in recent and current Android camera phones.

What is pixel binning?

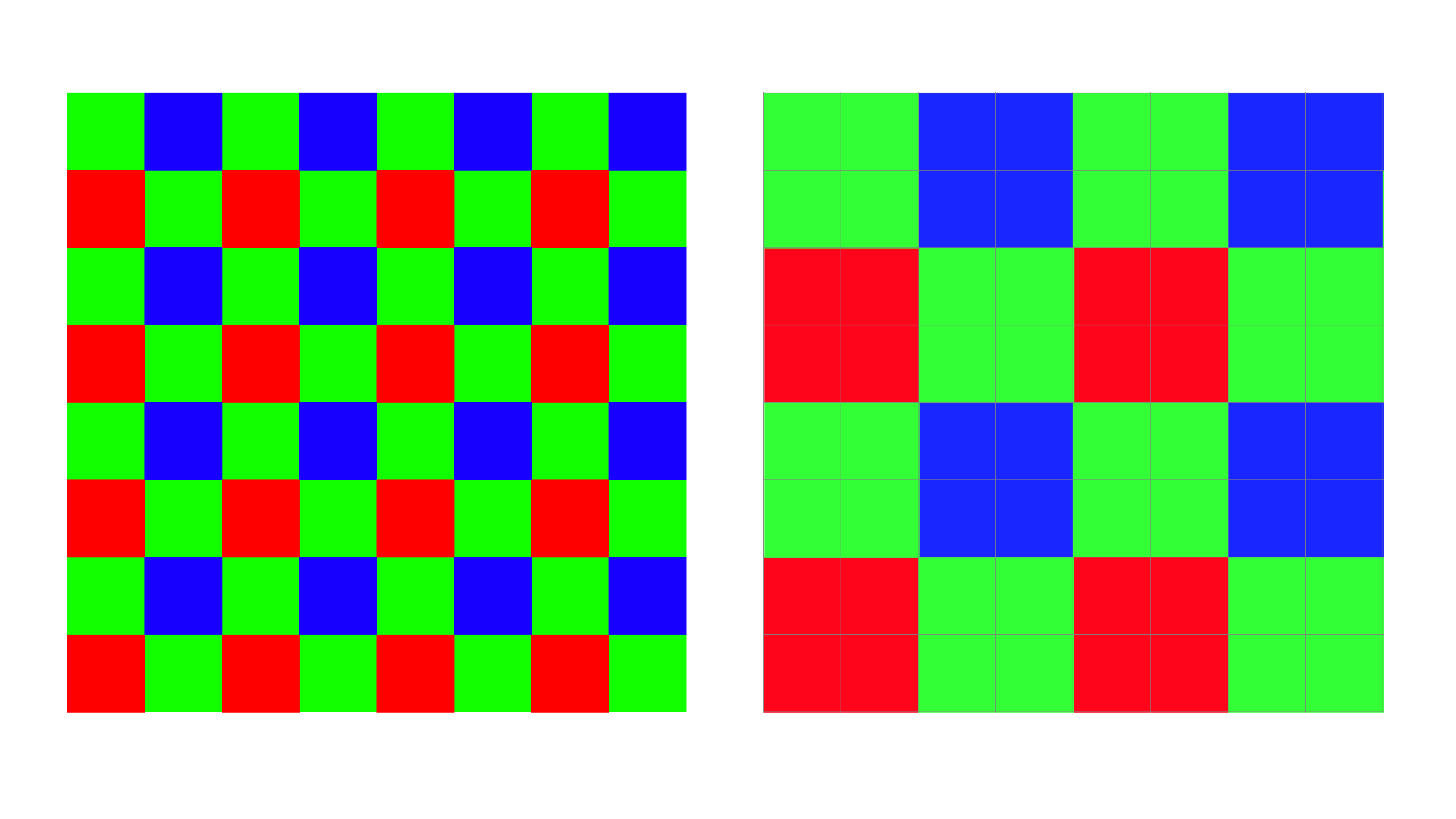

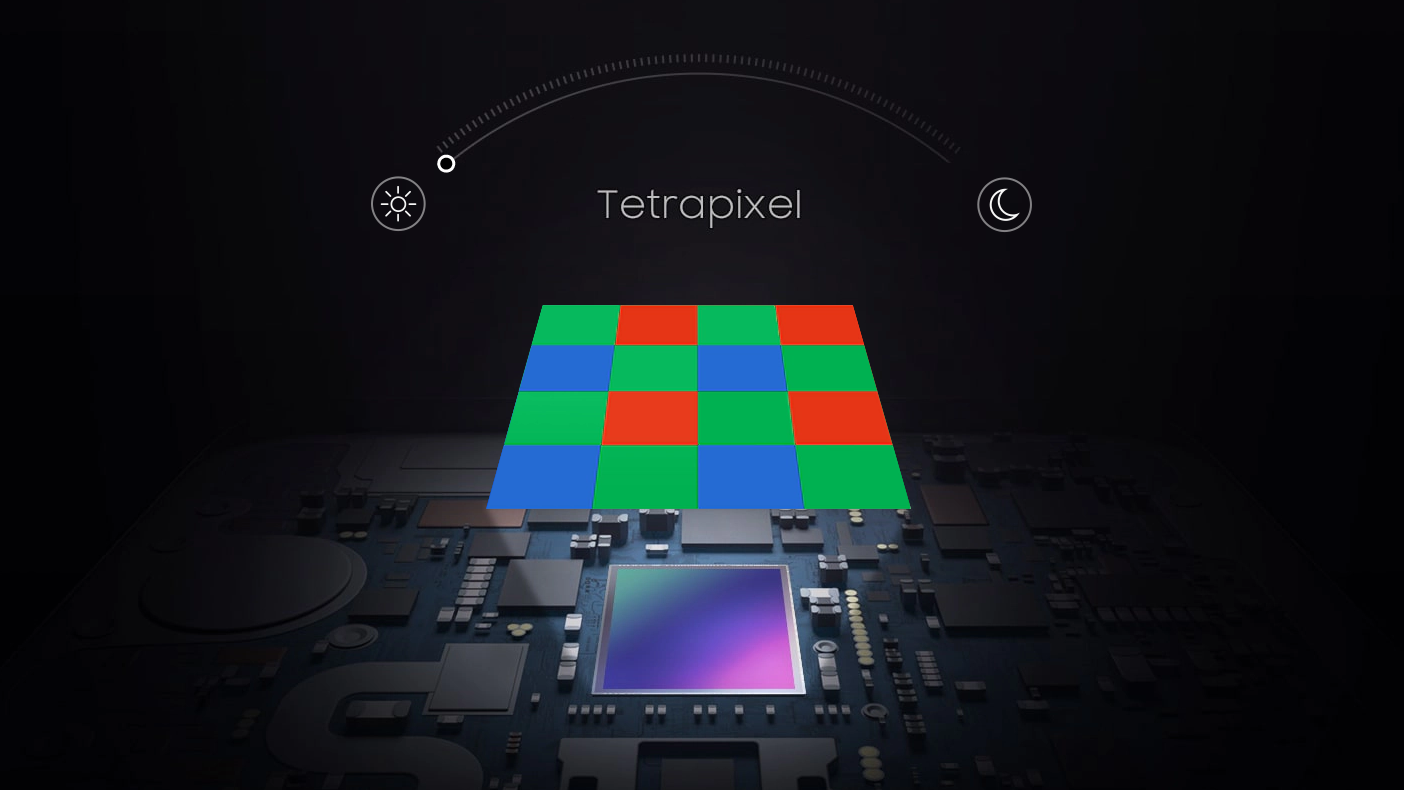

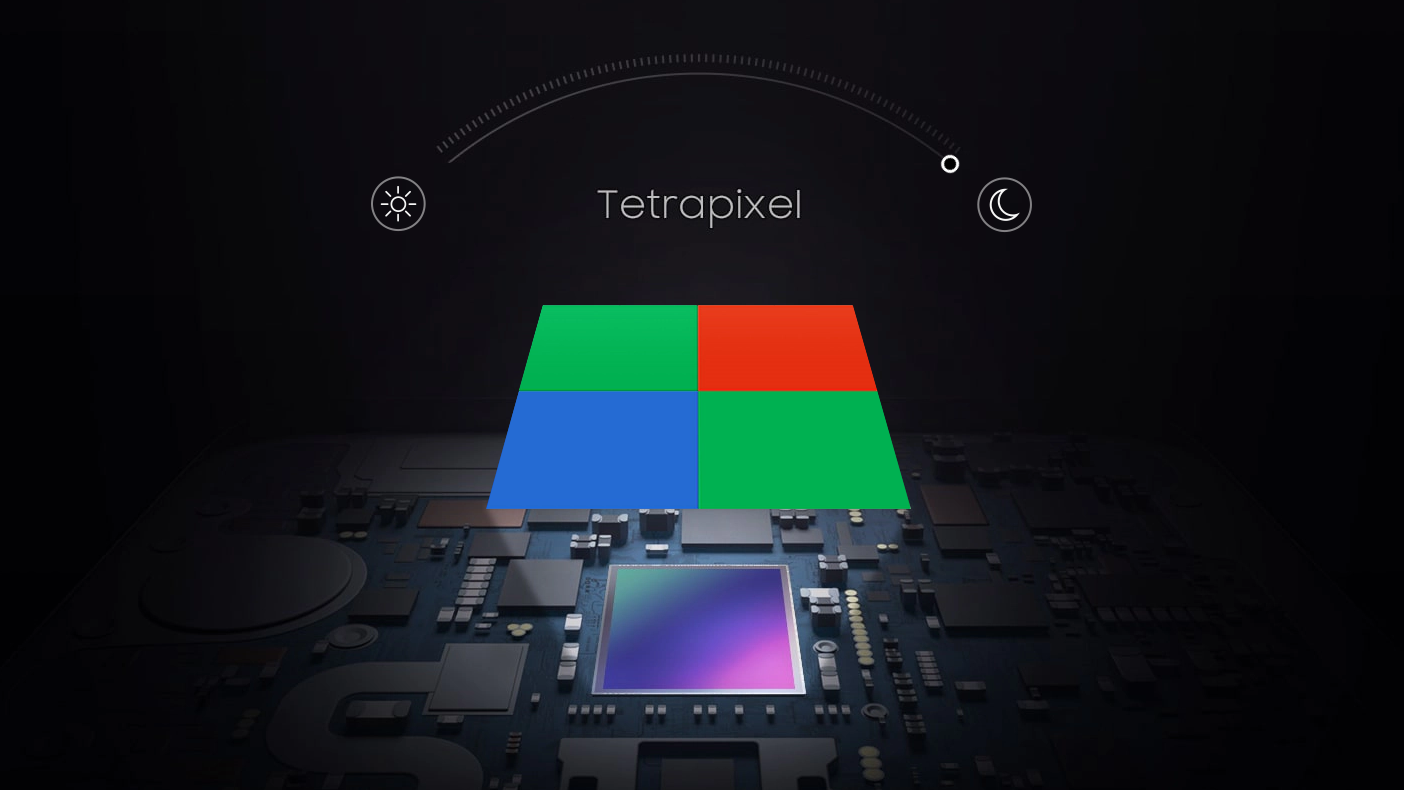

The term 'pixel binning' means taking a group of adjacent 'pixels' on a camera's image sensor, most commonly four arranged in a 2 x 2 quad, and treating them as one big 'super' pixel.

This is done to improve image quality and reduce image noise (grain). It also explains why your 64MP or 108MP camera phone almost always outputs 16MP or 27MP images, respectively: combine every four pixels into one and you end up with an image that has one-quarter as many pixels.
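To make the 2 x 2 idea concrete, here is a minimal Python sketch, assuming a single-channel (monochrome) grid of brightness values. The function names `bin_2x2` and `binned_megapixels` are hypothetical, and averaging is just one choice: a pipeline could equally sum the four values. Real phone sensors bin same-color photosites under a color filter array, which this sketch ignores.

```python
def bin_2x2(image):
    """Average each non-overlapping 2 x 2 block of a 2D grid into one value.

    Assumes a single-channel grid with even width and height; the color
    filter array found on real sensors is ignored in this sketch.
    """
    rows, cols = len(image), len(image[0])
    if rows % 2 or cols % 2:
        raise ValueError("width and height must both be even")
    binned = []
    for r in range(0, rows, 2):
        row = []
        for c in range(0, cols, 2):
            # Add up the four neighbouring values that form one 'super' pixel.
            block_sum = (image[r][c] + image[r][c + 1]
                         + image[r + 1][c] + image[r + 1][c + 1])
            row.append(block_sum / 4)
        binned.append(row)
    return binned


def binned_megapixels(sensor_mp, block=2):
    """Output resolution after binning block x block groups of photosites."""
    return sensor_mp / (block * block)


# A 4 x 4 grid becomes 2 x 2: half the width, half the height, a quarter of the pixels.
grid = [
    [10, 12, 50, 54],
    [14, 12, 52, 50],
    [90, 94, 30, 30],
    [92, 88, 34, 26],
]
print(bin_2x2(grid))                                  # [[12.0, 51.5], [91.0, 30.0]]
print(binned_megapixels(64), binned_megapixels(108))  # 16.0 27.0
```

Note that a quarter of the pixel count means half the width and half the height, so a binned image is not as dramatically smaller on screen as "one quarter" might suggest.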

Want to know more? Read on...

Pixel binning, in depth...

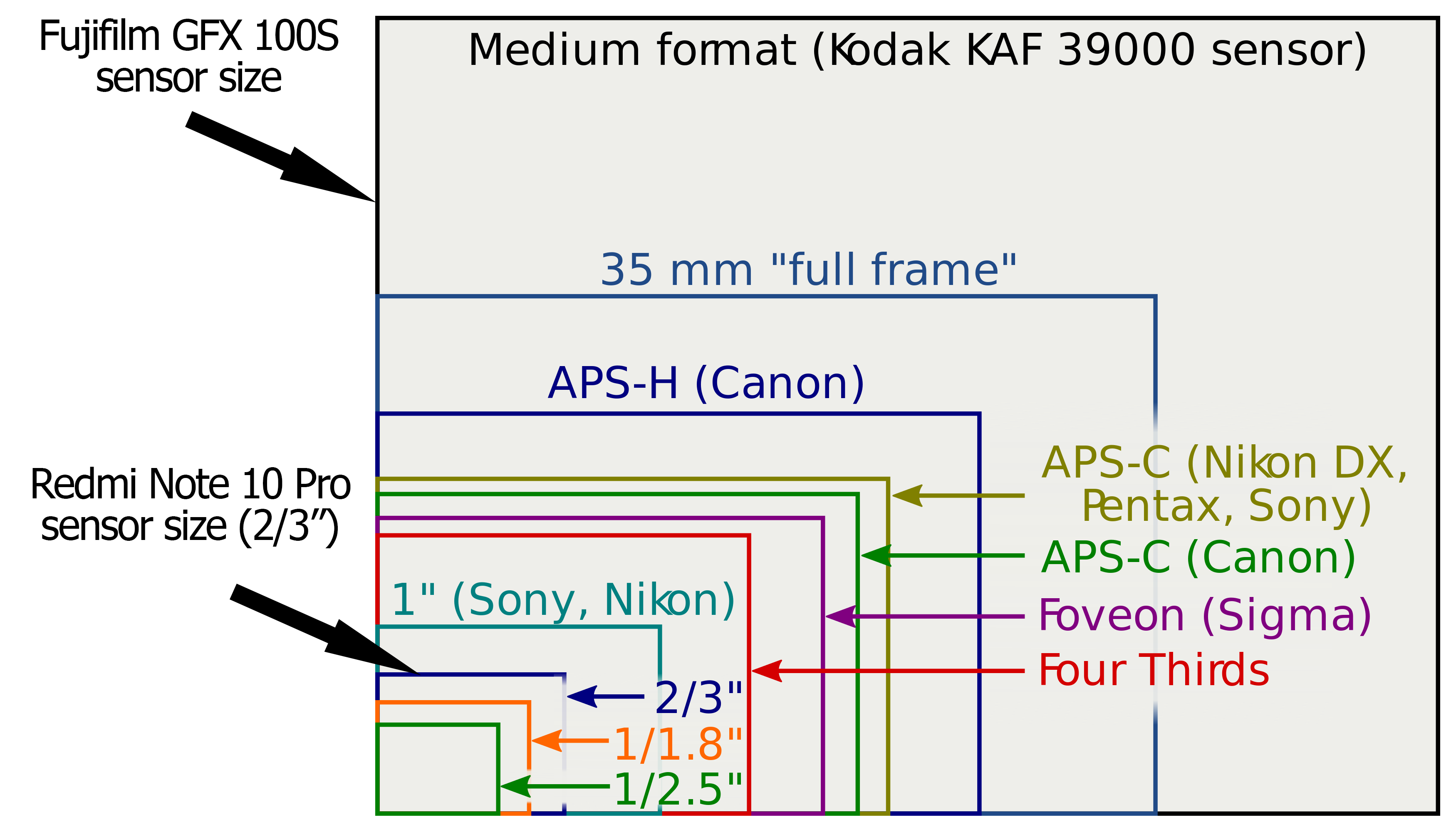

No sooner have phone cameras decimated the point-and-shoot compact camera sector than we see them snapping at the heels of large-sensor interchangeable lens cameras. With even budget phones like the Xiaomi Redmi Note 10 Pro packing a 108MP primary image sensor for around $200, you could easily wonder who in their right mind would drop $6,000 on a 102MP Fujifilm GFX 100S - a camera the size of a brick, almost as heavy, and which doesn't even come with a lens for that price.

But as with so many things that seem too good to be true, there is a catch. Super-high res phone cameras employ a method of image processing known as 'pixel binning'. This is a slightly confusing name though, as we're not really dealing with pixels here. Pixels - aside from being a critically panned Hollywood movie - are strictly speaking the smallest individual element of a digital picture (excluding vector graphics) or an LCD display screen.

Though we talk about megapixels in the context of cameras, this really only applies to the digital images generated by the camera sensor - not the sensor itself. The sensor is made up of millions of light-sensitive photosites, not pixels. However, 'megaphotosite' doesn't quite roll off the tongue, does it? Anyway, semantics aside, the primary role of these millions of photosites that make up the surface of a camera sensor is to gather as much light as possible in the shortest span of time. Make the size of each photosite bigger and it can suck in more light over a given time-span - good news for keeping shutter speeds fast and therefore minimizing the risk of a blurred photo due to camera shake.

This is why a camera such as the full-frame Sony A7S III (the S stands for Sensitivity) has a seemingly pathetic 12.1MP sensor resolution: spreading 'only' 12.1 million photosites over a huge 36 x 24mm sensor area means each photosite is physically large. The camera can therefore gather more light in any given time-frame and consequently produces less ugly image noise (grain, etc). This is particularly beneficial when shooting in dim lighting, where ambient light levels are low and you need your camera's sensor to be as light-sensitive as possible to compensate.
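The photosite-size argument is easy to put into numbers. This rough sketch estimates photosite pitch from sensor dimensions and pixel count, assuming square photosites that tile the whole sensor with no gaps. The A7S III figures come from the paragraph above; the 9.6 x 7.2mm, 108MP phone sensor is a hypothetical example chosen for round numbers, not a specific product.

```python
import math


def pixel_pitch_um(width_mm, height_mm, megapixels):
    """Approximate photosite pitch in micrometres.

    Assumes square photosites covering the entire sensor area with no gaps,
    which real sensors only approximate.
    """
    area_um2 = (width_mm * 1000) * (height_mm * 1000)  # mm -> micrometres
    return math.sqrt(area_um2 / (megapixels * 1e6))


a7s3 = pixel_pitch_um(36, 24, 12.1)    # full-frame, 12.1MP (nominal 36 x 24mm)
phone = pixel_pitch_um(9.6, 7.2, 108)  # hypothetical 108MP phone sensor
print(f"A7S III: {a7s3:.2f} um, phone: {phone:.2f} um")  # A7S III: 8.45 um, phone: 0.80 um
# Light-collecting area scales with the square of the pitch.
print(f"Area ratio: {(a7s3 / phone) ** 2:.0f}x")         # Area ratio: 112x
```

Under these assumptions, each A7S III photosite has over a hundred times the area of one in the hypothetical phone sensor.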

Trouble is, camera/phone manufacturers - or specifically, their marketing departments - just LOVE megapixels. More megapixels sell cameras, especially camera phones, where your average user assumes a higher megapixel camera is simply better than a lower megapixel rival. The logical answer is to cram as many megapixels onto a sensor as possible, and that's exactly what we see with phones like the Redmi Note 10 Pro, and numerous others.

While this is great for producing huge, theoretically detailed images, it's bad news for image quality. To fit into a wafer-thin smartphone, an image sensor has to be small, which in turn means all those 108 million photosites have to be unimaginably, microscopically minuscule. This severely limits their light sensitivity, so the camera's image processor is fed weak image signal data and has to do more guesswork to render the final image you see. This 'guesswork' shows up in the form of image noise - grain, unwanted color speckling, etc.
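One way to see why tiny photosites mean noisy images is photon shot noise: light arrives as discrete photons, so a photosite that collects N photons on average fluctuates by about sqrt(N), giving a signal-to-noise ratio (SNR) of sqrt(N). This sketch assumes a hypothetical photon flux and ignores read noise, dark current and quantum efficiency, so treat the numbers as illustrative only.

```python
import math


def shot_noise_snr(photosite_area_um2, photons_per_um2):
    """SNR of one photosite limited only by photon shot noise.

    Photon arrivals follow Poisson statistics, so N photons on average come
    with noise sqrt(N), and SNR = N / sqrt(N) = sqrt(N).
    """
    photons = photosite_area_um2 * photons_per_um2
    return math.sqrt(photons)


FLUX = 100  # hypothetical photons per square micrometre for one dim exposure

big = shot_noise_snr(8.45 ** 2, FLUX)  # roughly A7S III-sized photosite
tiny = shot_noise_snr(0.8 ** 2, FLUX)  # hypothetical 0.8 um phone photosite
print(f"large photosite SNR ~ {big:.1f}, tiny photosite SNR ~ {tiny:.1f}")
# large photosite SNR ~ 84.5, tiny photosite SNR ~ 8.0
```

In this simple model, roughly 100 times less area yields roughly 10 times worse SNR, because SNR grows only with the square root of the light collected.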

So what's the answer? Sensor photosites need to be big so you get good image clarity, but everyone wants 50+ megapixel cameras and physically huge, super-high-res digital images. Enter the answer to everyone's prayers: pixel binning!

At its most basic, this simply means grouping multiple adjacent pixels into one 'super' pixel. The usual method is to take four adjacent pixels in a 2 x 2 array and treat them as a single pixel with four times the light-gathering area. The downside is that while the light collected per 'super' pixel theoretically quadruples, you only have one quarter as many effective photosites to play with, so your images end up with one quarter the pixel count: a 108MP image becomes a 27MP image.
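Four times the light does not make the image four times cleaner, though. Under a simple model where each photosite has Poisson shot noise plus independent read noise, and the four values are summed after readout, the signal grows fourfold but the noise only doubles, so SNR improves by a factor of sqrt(4) = 2. The electron counts below are hypothetical, and the function names are illustrative.

```python
import math


def snr(signal_e, read_noise_e):
    """SNR of one reading with Poisson shot noise plus read noise (in electrons)."""
    return signal_e / math.sqrt(signal_e + read_noise_e ** 2)


def binned_snr(signal_e, read_noise_e, n=4):
    """SNR after digitally summing n photosites that each saw signal_e electrons.

    Assumes the n values are read out separately and added afterwards, so the
    signal adds n times and so do the independent noise variances.
    """
    total_signal = n * signal_e
    total_variance = n * signal_e + n * read_noise_e ** 2
    return total_signal / math.sqrt(total_variance)


S, R = 64, 3  # hypothetical: 64 electrons per photosite, 3 electrons read noise
single = snr(S, R)
binned = binned_snr(S, R)
print(f"single: {single:.2f}, 2 x 2 binned: {binned:.2f}, gain: {binned / single:.2f}x")
# single: 7.49, 2 x 2 binned: 14.98, gain: 2.00x
```

The gain is exactly sqrt(n) in this model whatever values you pick for `S` and `R`, which is why a 2 x 2 bin roughly doubles, rather than quadruples, image clarity in noise terms.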

And that's the crucial difference between the 108MP phone camera and the $6,000 102MP Fujifilm GFX 100S: the GFX doesn't just have a lot of megapixels, it also has an absolutely massive image sensor, so it can actually use all those 102 million photosites without compromising image quality. Consequently, the camera itself also has to be big to house such a large image sensor. You simply can't have a giant megapixel count on a sensor small enough to fit in a phone without some compromise, and that compromise is pixel binning.

While a phone camera with a 50MP, 64MP or 108MP sensor can likely shoot images at its full resolution, chances are it won't by default. And even if you do manually select that balls-to-the-wall max res mode, you may well be disappointed with the results: when each photosite has to work alone, you see the full extent of its reduced light sensitivity and the resulting high image noise levels. That's not to say shooting at max res will always produce disappointing results - if light levels are good and you have a very steady hand, it is possible to get super-sharp full-res, non-pixel-binned images.

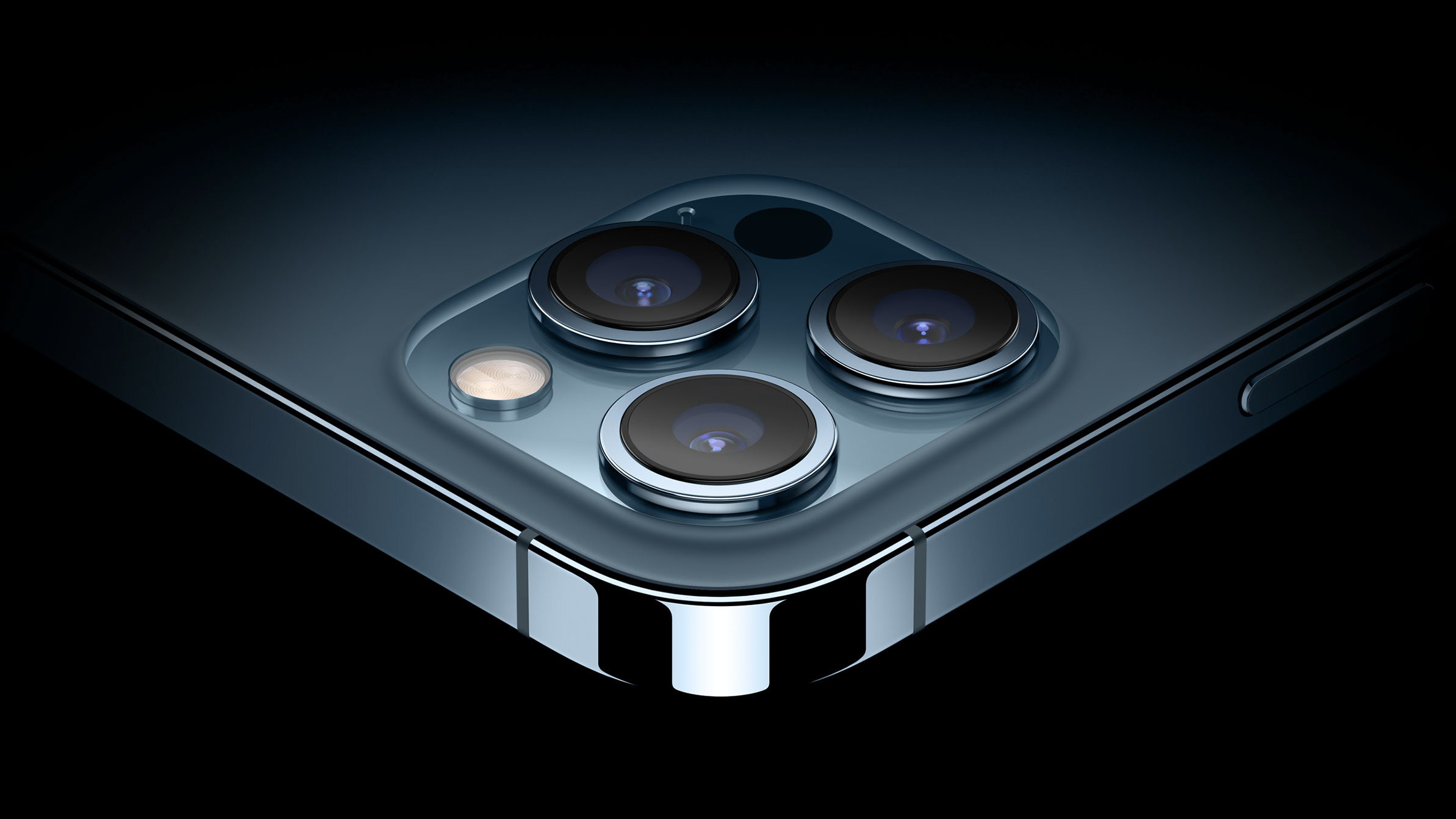

Not all phone manufacturers employ this marketing trickery and try to cram 50+ megapixel sensors into their phones. Apple has the market share and brand loyalty to play it straight and avoid pixel binning, using a 12MP camera in its iPhone 12 Pro that outputs 12MP images - no nasty size-reduction surprises. This also means the phone's other cameras, like its ultrawide and telephoto snappers, can be 12MP too.

Consequently, when you switch to one of the extra cameras, you don't see a noticeable drop in image quality or size. Such a drop is often seen on phones that supplement their 50+ MP primary camera with much lower-megapixel ultrawide and telephoto cameras, which don't employ pixel binning to compensate for their image quality shortcomings.

In conclusion, on the one hand pixel binning gives you the best of both worlds: an ultra-high resolution sensor that can also perform well in low light. On the other hand, when most of us only view our phone snaps on a tiny phone screen, do we really need 100+ MP phone camera images? This is especially pertinent when you remember that most high-res camera phones shoot pixel-binned, quarter-size images by default, in which case you could consider pixel binning a 'solution' to a problem that needn't exist in the first place.
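For a rough sense of scale, this sketch compares image pixel counts with what a phone screen can display at once. The 2400 x 1080 display resolution is an assumption (typical of many recent phones, but not taken from this article), and the comparison ignores zooming in, cropping and differing aspect ratios.

```python
def oversampling(image_mp, screen_w, screen_h):
    """How many image pixels exist for every pixel the screen can show."""
    screen_mp = screen_w * screen_h / 1e6
    return image_mp / screen_mp


SCREEN = (2400, 1080)  # hypothetical phone display resolution (about 2.6MP)
for mp in (12, 27, 108):
    print(f"{mp}MP image: {oversampling(mp, *SCREEN):.1f}x the screen's pixel count")
# 12MP image: 4.6x the screen's pixel count
# 27MP image: 10.4x the screen's pixel count
# 108MP image: 41.7x the screen's pixel count
```

Even a binned 27MP image holds around ten times more pixels than such a screen can show at once, which is the point behind the question above.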

Ben is the Imaging Labs manager, responsible for all the testing on Digital Camera World and across the entire photography portfolio at Future. Whether he's in the lab testing the sharpness of new lenses, the resolution of the latest image sensors, the zoom range of monster bridge cameras or even the latest camera phones, Ben is our go-to guy for technical insight. He's also the team's man-at-arms when it comes to camera bags, filters, memory cards, and all manner of camera accessories – his lab is a bit like the Batcave of photography! With years of experience trialling and testing kit, he's a human encyclopedia of benchmarks when it comes to recommending the best buys.