AI can now create photo-realistic police sketches – and there's a BIG problem

New forensic AI software uses DALL·E 2 to create photo-realistic police sketches – but there's a huge problem with it

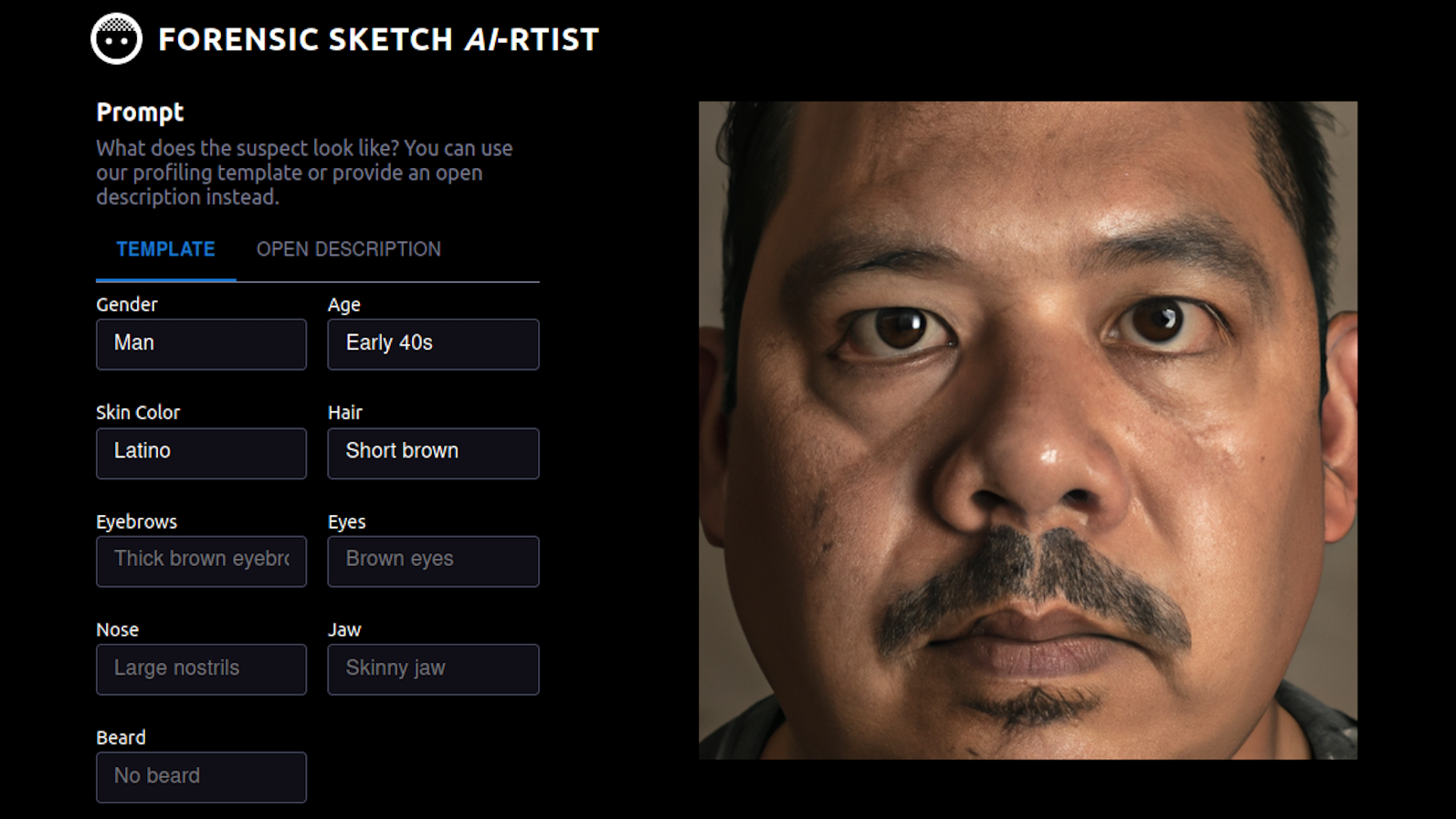

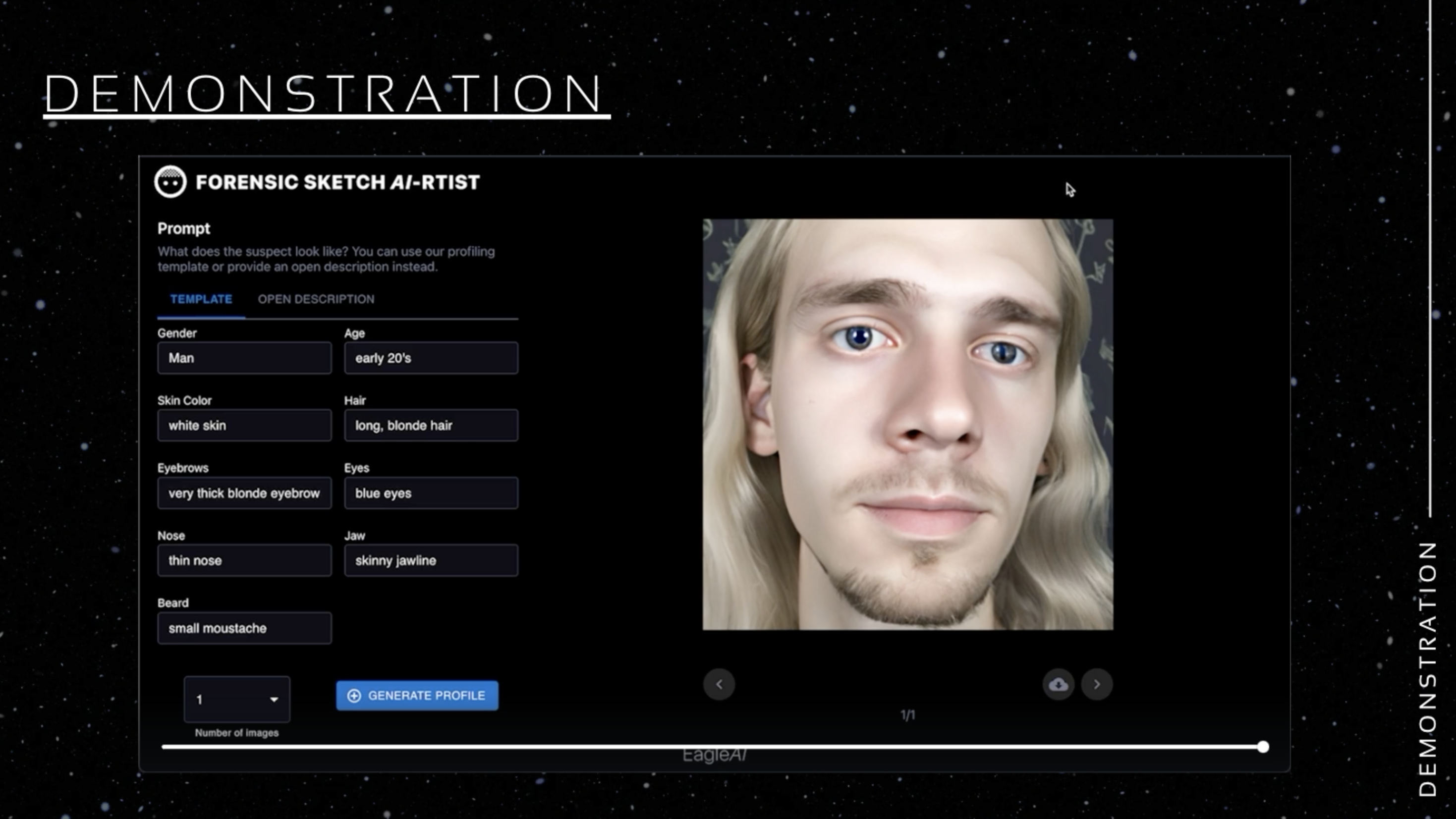

AI is becoming more problematic by the minute. Is there anything it can't create? The latest software causing a stir is Forensic Sketch AI-rtist, a program that generates photo-realistic police sketches of potential suspects in an attempt to cut down the time it would usually take a sketch artist to draw one.

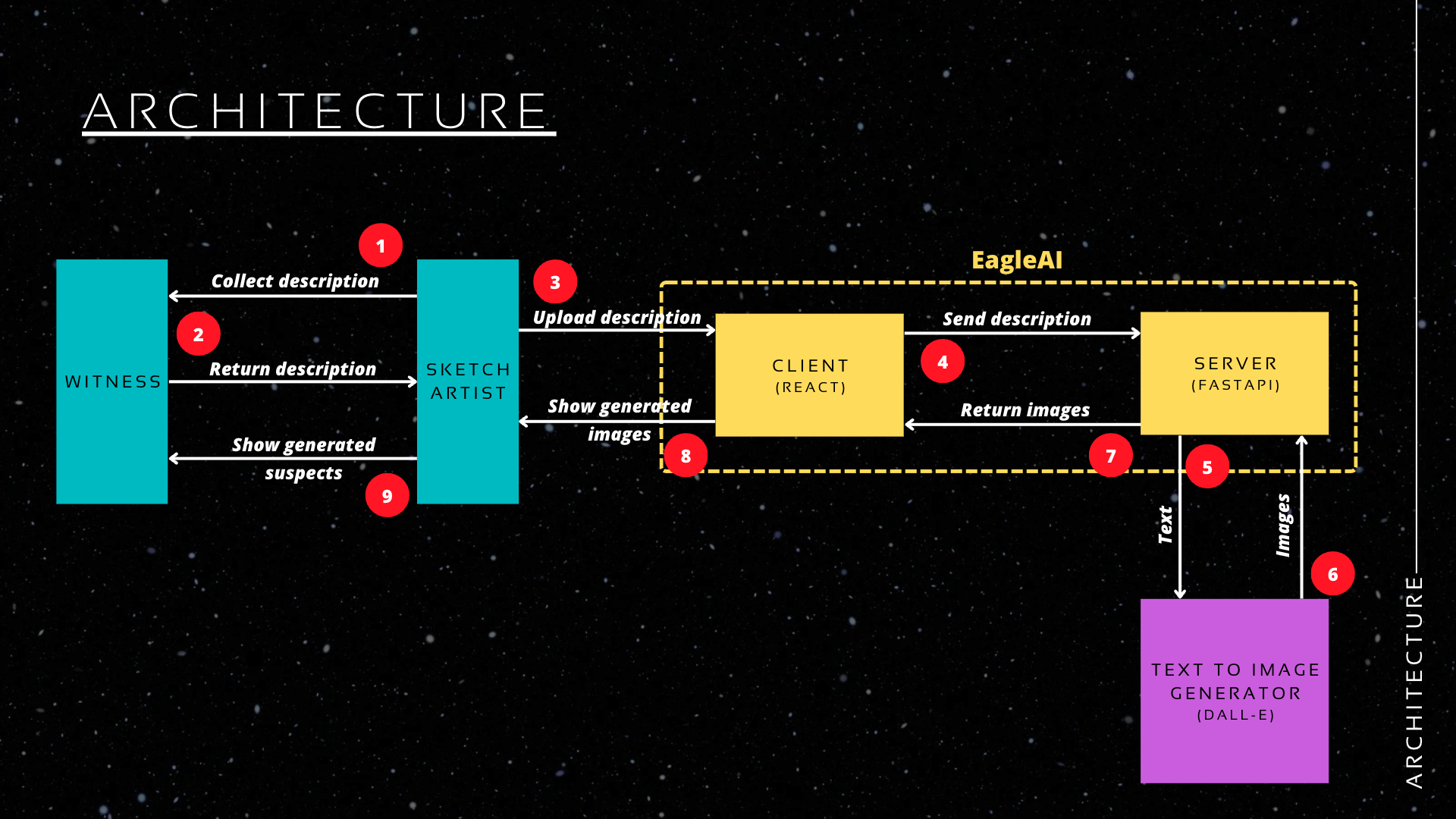

The program was created in December 2022 by two developers who call themselves EagleAI. It uses OpenAI's existing DALL·E 2 image generation model to create a realistic image from a text prompt – or in this case, a witness description.

EagleAI is a partnership between cloud infrastructure engineer Artur Fortunato and software engineer Filipe Reynaud. In creating Forensic Sketch AI-rtist, the duo aimed to solve what they identified as a key problem: how long it takes a forensic sketch artist to produce a drawing.

The team found that it typically takes around two to three hours for a sketch artist to complete their work, because the artist and witness need to be in the same location and the drawing is made with traditional pen and paper.

Forensic Sketch AI-rtist speeds up this process by using artificial intelligence to generate an image from a text description, then letting the sketch artist make corrections based on feedback from the victim or witness. Simple solution, right?

In an informative presentation uploaded to Canva and narrated by an AI voice named Nate, EagleAI explains why it created the program and how the program's architecture works. While the software may seem to have good intentions and practical real-world uses, there's something extremely problematic about it.

The issue many people have with these new AI tools and programs is not just their implications for copyright infringement and art theft, but their embedded bias. AI tools have on multiple occasions produced stereotyped, sexist, racist, and otherwise inappropriate output, and this largely comes down to the datasets they are trained on, which were scraped from the internet without careful filtering.

Speaking to Motherboard (Vice), AI ethicists and researchers shared concerns that applying generative AI to police forensics could be incredibly dangerous, potentially worsening the racial and gender biases that often appear in the initial witness descriptions given to law enforcement.

"The problem is that any forensic sketch is already subject to human biases and the frailty of human memory," said Jennifer Lynch, surveillance litigation director at the Electronic Frontier Foundation.

"Research has shown that humans remember faces holistically, not feature-by-feature. A sketch process that relies on individual feature descriptions like this AI program can result in a face that's strikingly different from the perpetrator's.

"Unfortunately, once the witness sees the composite, that image may replace in their minds, their hazy memory of the actual suspect. This is only exacerbated by an AI-generated image that looks more 'real' than a hand-drawn sketch."

Lynch also pointed out that mistaken eyewitness identifications and false or misleading forensic evidence contribute to almost 25% of all wrongful convictions in the US, and that most of the people affected are Latinos and people of color.

The developers, Fortunato and Reynaud, said in a joint email to Motherboard that their program runs on the assumption that police descriptions are trustworthy, and that "police officers should be the ones responsible for ensuring that a fair and honest sketch is shared.

"Any inconsistencies created by it should be either manually or automatically (by requesting changes) corrected, and the resulting drawing is the work of the artist itself, assisted by EagleAI and the witness."

The AI can generate one, two, or four sketches at once, which should help build a broader picture of a potential suspect. The developers said in the online presentation that the program will be tested by beta sketch artists and police precincts, whose feedback will help determine its market fit. Still, this is yet another example of the huge inherent issues with AI as an emerging technology, and of the consequences it could have for ethical law enforcement.

Beth kicked off her journalistic career as a staff writer here at Digital Camera World, but has since moved over to our sister site Creative Bloq, where she covers all things tech, gaming, photography, and 3D printing. With a degree in Music Journalism and a Master's degree in Photography, Beth knows a thing or two about cameras – and you'll most likely find her photographing local gigs under the alias Bethshootsbands. She also dabbles in cosplay photography, bringing comic book fantasies to life, and uses a Canon 5DS and Sony A7III as her go-to setup.