Google can turn text into realistic images using AI – is it a threat to photographers?

Google is researching an advanced AI system that can convert text into images with unprecedented photorealism, but it isn't ready for public access yet.

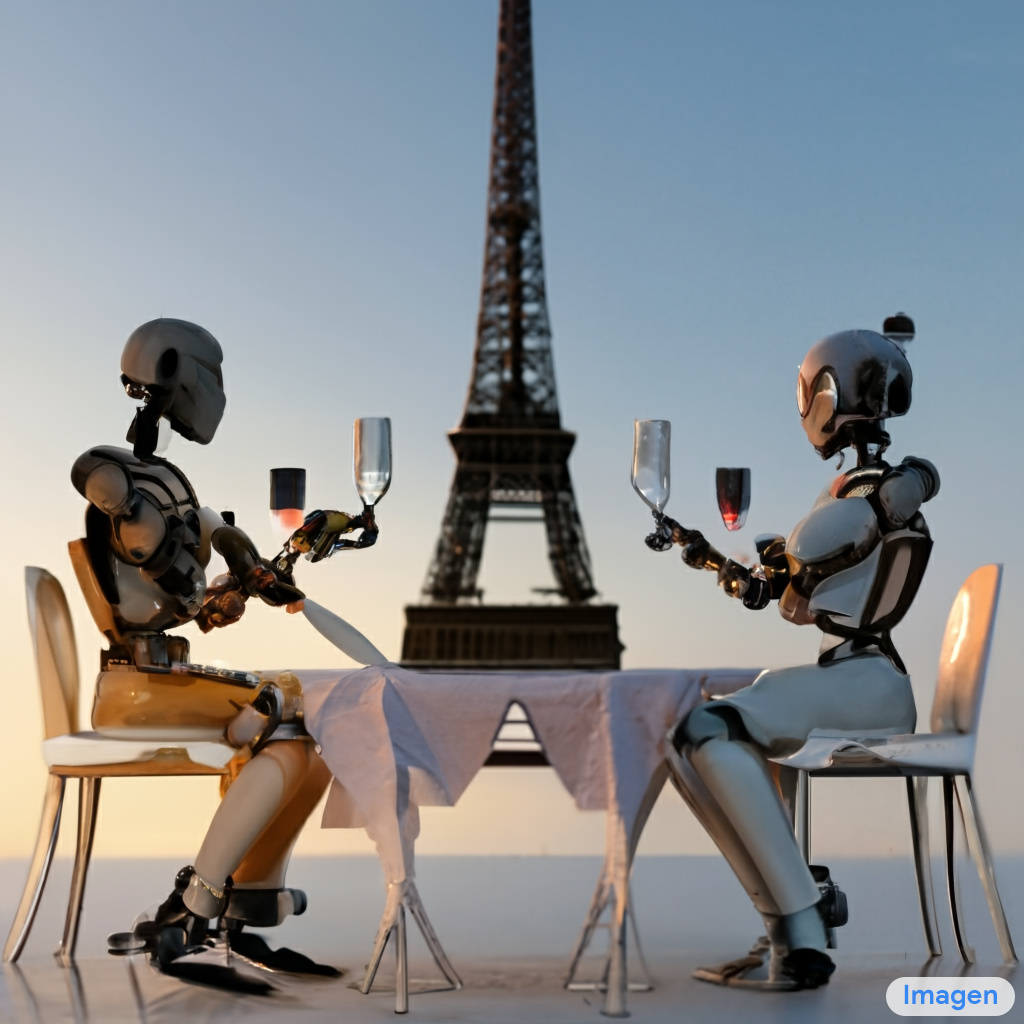

Google's latest research effort is an AI text-to-image diffusion model that can produce strikingly realistic images from a simple phrase, prompt or text description. Imagen is the name of this very cool AI, and it could potentially be doing our jobs as photographers for us.

A deep level of language understanding is paired with unprecedented photorealism to create fabricated images via what Google is calling a text-to-image diffusion model. If you can imagine it, chances are Imagen can create it.

Ever wondered what an alien octopus floating through a portal reading a newspaper might look like? Imagen has already created the image so that you no longer have to. This industry-changing diffusion model could have limitless potential applications in our lives when it is (eventually) made available to the public.

Brought to you by Google Research's Brain Team, the Imagen AI model can create virtually anything you can think of or put into words, producing a scarily accurate-looking image that could easily have been captured on a smartphone camera or even a DSLR, with added post-production. So should we be worried about this new AI entering the business and stealing our jobs? Not just yet.

While Google is usually very proud of its latest developments and keen to push them, it has made clear that this text-to-image diffusion model is in no way ready for public access just yet.

The team is considering the model's limitations and societal impact. Text-to-image research broadly faces several ethical challenges, including racial and gender bias, because researchers have had to rely heavily on large, mostly uncurated datasets scraped from the web.

A statement on Google's Imagen research site says: "Datasets of this nature often reflect social stereotypes, oppressive viewpoints, and derogatory, or otherwise harmful, associations to marginalized identity groups. While a subset of our training data was filtered to remove noise and undesirable content, such as pornographic imagery and toxic language, we also utilized LAION-400M dataset which is known to contain a wide range of inappropriate content including racist slurs, and harmful social stereotypes."

The site continues to elaborate that "Imagen encodes several social biases and stereotypes, including an overall bias towards generating images of people with lighter skin tones and a tendency for images portraying different professions to align with Western gender stereotypes... We aim to make progress on several of these open challenges and limitations in future work."

Imagen is also said to exhibit serious limitations when generating images that depict people and human faces, which is why most of the sample images released so far are of animals or objects.

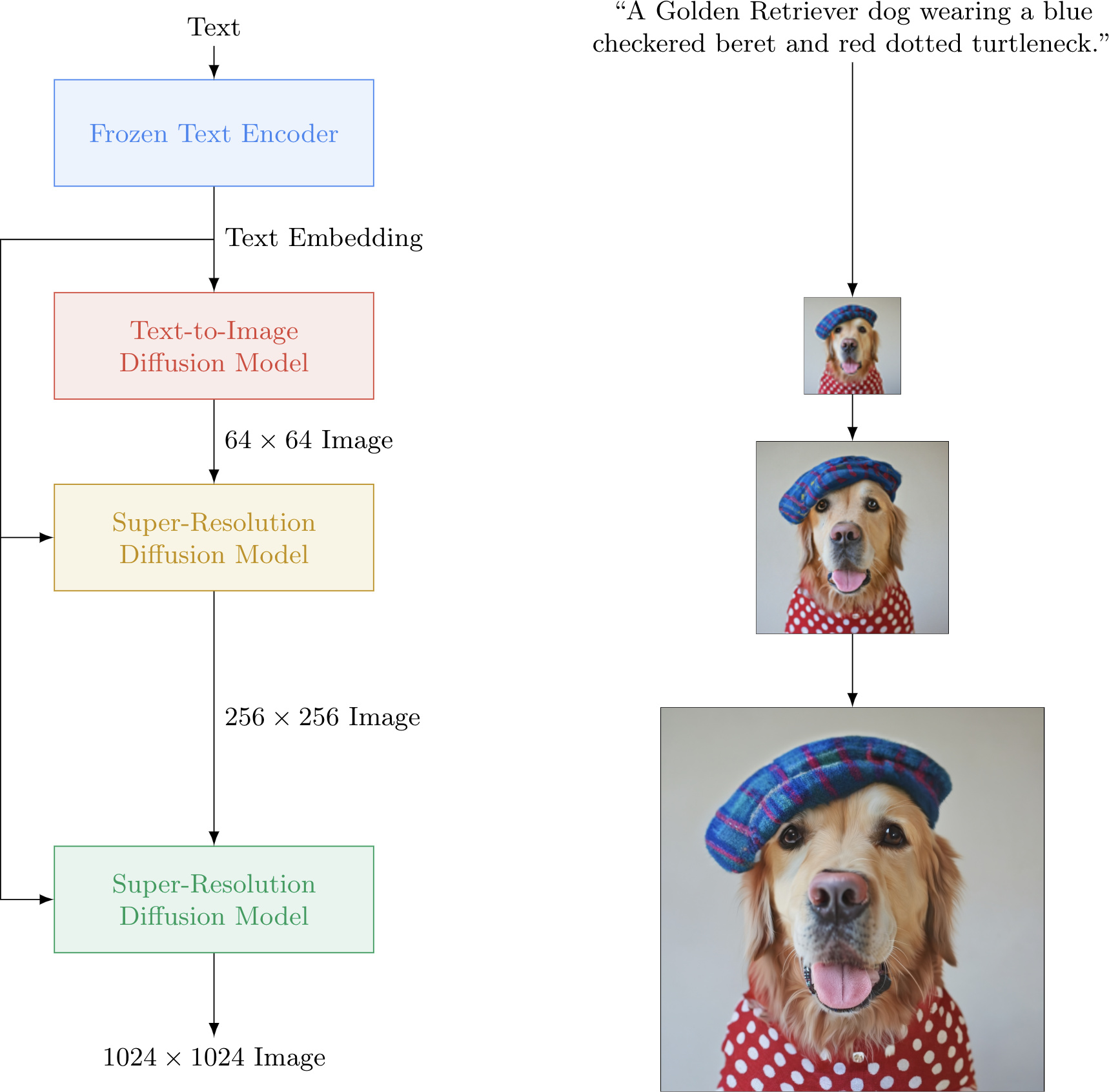

The team working on Imagen have published a full research paper detailing the complete mathematical and technological workings behind this AI. In short, it uses a large frozen T5-XXL encoder to turn the input text into embeddings. A conditional diffusion model then maps the text embedding into a 64×64 image, which is upsampled in two steps by text-conditional super-resolution diffusion models: first 64×64→256×256, then 256×256→1024×1024.
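For readers who think in code, the chain of stages described above can be traced with a few lines of Python. This is only a rough sketch of the resolution flow, not Google's implementation: the `Stage` class, the stage labels and both helper functions are made up for illustration, and only the image sizes come from the description above. No text encoding or image generation actually happens here.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Stage:
    name: str               # illustrative label, not a name from Google's code
    in_res: Optional[int]   # input image side in pixels; None for the base stage
    out_res: int            # output image side in pixels


# Image sizes as quoted in the article; everything else here is hypothetical.
CASCADE = [
    Stage("base text-conditional diffusion", None, 64),
    Stage("super-resolution diffusion 1", 64, 256),
    Stage("super-resolution diffusion 2", 256, 1024),
]


def trace_resolutions(stages: List[Stage]) -> List[int]:
    """Check that each stage consumes the previous stage's output size
    and return the image side length after every stage."""
    sizes: List[int] = []
    for stage in stages:
        if sizes and stage.in_res != sizes[-1]:
            raise ValueError(
                f"{stage.name} expects {stage.in_res}px, got {sizes[-1]}px"
            )
        sizes.append(stage.out_res)
    return sizes


def upscale_factors(stages: List[Stage]) -> List[int]:
    """Return how much each super-resolution stage enlarges the image side."""
    return [s.out_res // s.in_res for s in stages if s.in_res is not None]


if __name__ == "__main__":
    print(trace_resolutions(CASCADE))  # side length after each stage
    print(upscale_factors(CASCADE))    # enlargement per super-resolution stage
```

The point of the sketch is simply that each super-resolution step enlarges the image side fourfold, so the model never has to generate a 1024×1024 image in one go.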

They've even created a handy diagram to explain things a bit better (see below).

While some images like the octopus one look a little cartoonish and sort of like the subject is made from clay, from a photography perspective most of the example images created by Google Imagen appear to do a fantastic job at employing basic photography techniques such as depth of field, composition, and key focus points.

Having admittedly not read the entire research paper, I find it unclear, in simple terms, how the AI actually creates these images, and whether it takes samples and snippets from an existing pool of license-free images to create the unusual ones prompted by the text provided.

As for when the AI could become accessible to all, Google says on its research site: "There is a risk that Imagen has encoded harmful stereotypes and representations, which guides our decision to not release Imagen for public use without further safeguards in place. In future work we will explore a framework for responsible externalization that balances the value of external auditing with the risks of unrestricted open-access."

It's unclear exactly how Google intends to use the Imagen diffusion model, or when its potential might be realized worldwide. For now, it's a pretty cool research avenue, and it won't be a threat to photographers until its biased stereotyping and human face rendering can be fixed.

Beth kicked off her journalistic career as a staff writer here at Digital Camera World, but has since moved over to our sister site Creative Bloq, where she covers all things tech, gaming, photography, and 3D printing. With a degree in Music Journalism and a Master's degree in Photography, Beth knows a thing or two about cameras – and you'll most likely find her photographing local gigs under the alias Bethshootsbands. She also dabbles in cosplay photography, bringing comic book fantasies to life, and uses a Canon 5DS and Sony A7III as her go-to setup.