Leaked patent suggests Google is working on an incredible new selfie camera

Notch no more! Google could be perfecting a completely invisible under-screen selfie camera

Details have emerged that Google could be devising a new form of under-screen front-facing camera for future Pixel phones. If the recent patent application translates into a successful implementation of the technology, it'd mean a clean, notch-free display.

The best camera phones all have a front-facing camera, which is handy not just for taking selfies but also for features like face unlock. However, useful as the selfie camera is, it invariably eats into screen real estate and detracts from the overall look of the phone. Smartphone design is all about maximising the screen-to-body ratio, with the slimmest possible bezels. Historically, a truly edge-to-edge display hasn't been possible, as the screen has had to make way for the selfie camera, whether in the form of a notch or a punch-hole. Some manufacturers have devised more creative solutions, like the OnePlus 7 Pro, which featured a pop-up selfie camera, or the Asus Zenfone 8 Flip, which could flip its entire rear camera module forward when you wanted to take a selfie.

But mechanical moving parts in a phone are always potential weak points, and they add extra bulk. The ultimate way to eliminate the screen notch is an under-display selfie camera, which we've seen recently in phones like the Samsung Galaxy Z Fold 5. It's technically challenging, however: the part of the display that sits over the camera lens mustn't show any image imperfections, yet it also can't obstruct the light that needs to reach the camera beneath. It's something of a Catch-22.

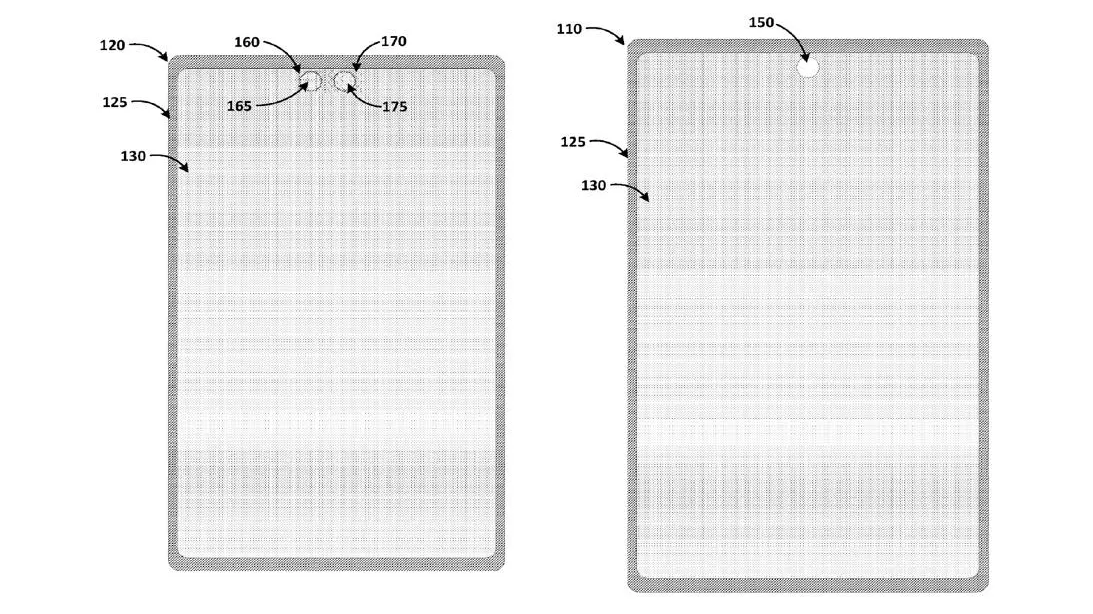

Google's patent shows an innovative take on the under-screen camera. Rather than using a single camera, which can compromise display quality in the area over the lens, Google's design would use a pair of cameras, each positioned behind its own region of the display. Each display region would feature a special material that blocks light in a specific pattern, one that corresponds to what the camera sensor behind it has been tuned to capture. One sensor could then record specific information such as sharpness, and the other color or monochrome data, with machine learning combining these components into a single complete image. Because the image is split into separate elements like this, the display area over each under-screen camera would presumably be less compromised than if it were covering a single camera, potentially reducing the impact on display quality.

It all sounds pretty ambitious, but as this is currently just a patent application, there's no guarantee that, let alone when, the idea will make it into actual hardware. If Google can turn a completely invisible under-screen camera into reality, it'll be quite a feat of engineering.

Story credit: Forbes

Read more:

The best Google Pixel phones

The best camera phones

The best burner phones

Which is the best iPhone for photography?

The best budget camera phones

Ben is the Imaging Labs manager, responsible for all the testing on Digital Camera World and across the entire photography portfolio at Future. Whether he's in the lab testing the sharpness of new lenses, the resolution of the latest image sensors, the zoom range of monster bridge cameras or even the latest camera phones, Ben is our go-to guy for technical insight. He's also the team's man-at-arms when it comes to camera bags, filters, memory cards, and all manner of camera accessories – his lab is a bit like the Batcave of photography! With years of experience trialling and testing kit, he's a human encyclopedia of benchmarks when it comes to recommending the best buys.