Panasonic AI will help AI cars understand their own fish eyes!

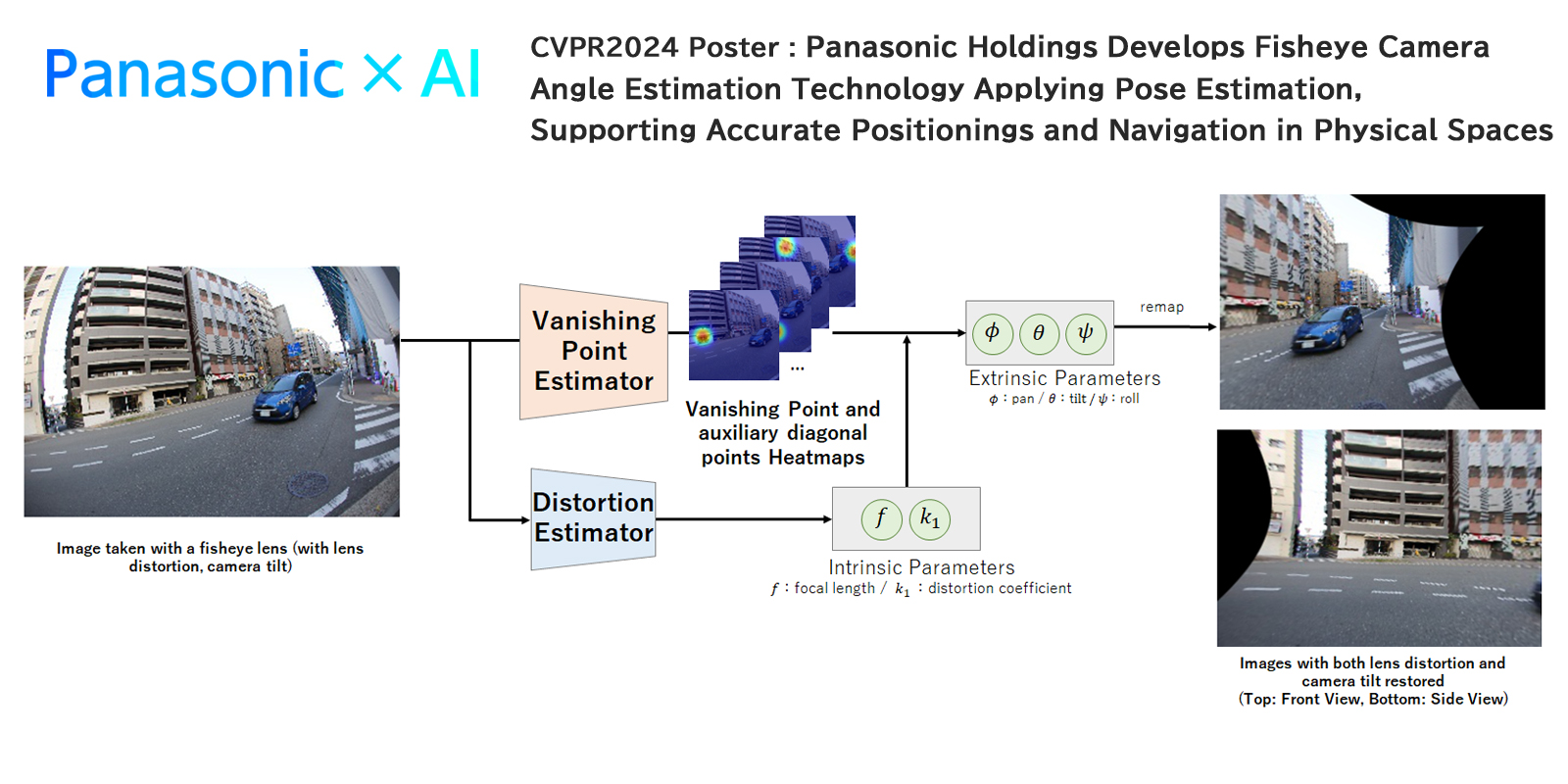

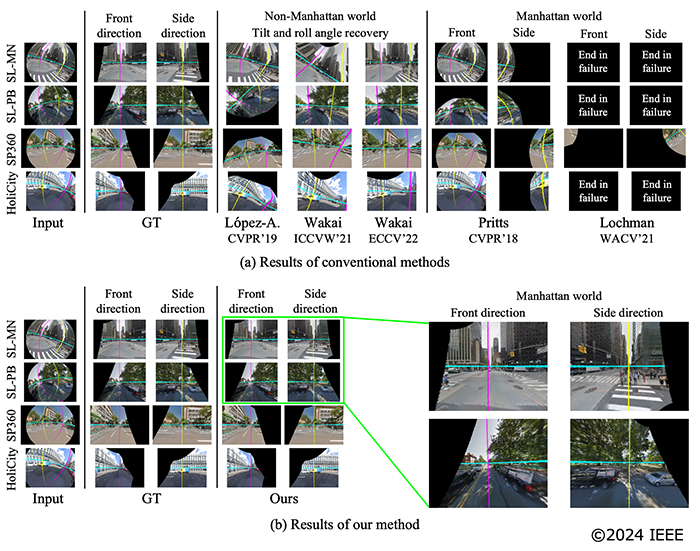

Panasonic Holdings Corporation will soon present a new AI-based camera calibration technology that accurately and robustly estimates camera angles from a fisheye image.

Self-driving cars, drones, and robots all need to estimate their direction of travel accurately so that they can position themselves and navigate while moving.

To achieve this, most existing systems pair the camera with dedicated measurement hardware, such as gyroscopes and LiDAR. To reduce size, weight, and cost, the technology needs to be able to estimate travel direction from captured images alone.

Panasonic Holdings commented:

“Panasonic Holdings aims to contribute to helping customers' lives and work through research and development of AI technology that accelerates social implementation and training of top AI researchers.”

Fisheye lenses are ultra wide-angle lenses that produce strong visual distortion to create a wide panoramic or hemispherical image with an extremely wide angle of view, far beyond any rectilinear lens.

You might not be able to afford one for your camera, but you probably know them from a reversing camera.

Rather than producing rectilinear images, in which straight lines in the scene stay straight, these lenses use a special mapping, or ‘distortion’.
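To make the difference between the two mappings concrete, here is a minimal Python sketch. It assumes the equidistant model (r = f × θ), which is one common fisheye projection; the article does not say which lens model Panasonic's method works with, and the function names are purely illustrative.

```python
import math

def rectilinear_radius(theta, f=1.0):
    """Distance from the image centre for a rectilinear lens: r = f * tan(theta)."""
    return f * math.tan(theta)

def equidistant_fisheye_radius(theta, f=1.0):
    """Distance from the image centre for an equidistant fisheye: r = f * theta.

    Assumption: the equidistant model is used here only as an example;
    real fisheye lenses follow a variety of mapping functions.
    """
    return f * theta

# Compare where a ray lands on the sensor as it moves away from the optical axis.
for degrees in (10, 45, 80, 89):
    theta = math.radians(degrees)
    print(
        f"{degrees:>2} deg off-axis: "
        f"rectilinear r = {rectilinear_radius(theta):8.3f}, "
        f"fisheye r = {equidistant_fisheye_radius(theta):6.3f}"
    )
```

As the angle approaches 90 degrees, the rectilinear radius grows without bound, while the fisheye radius stays finite. That is why a fisheye can squeeze a hemispherical view onto a sensor, at the cost of bending straight lines.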

Despite their wide-angle abilities, fisheye lenses can cause the viewer to struggle with angle estimation because of the distortion.

To address this problem, Panasonic Holdings has developed a method designed for functions including broad surveillance and obstacle detection. It essentially harnesses the wide-angle capabilities of a fisheye lens, but recalibrates the images to remove the distortion and make them easier for humans to process.

Panasonic Holdings said:

“Specifically, this accurate and robust method based on pose estimation can address drastically distorted images under the so-called “Manhattan world assumption;” the assumption that buildings, roads, and other man-made objects typically are at right angles to each other. Since the method can be calibrated from a single general image of a city scene, it can extend applications to moving bodies, such as cars, drones, and robots.”
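The geometric idea behind the Manhattan world assumption can be sketched in a few lines of Python. This is not Panasonic's method, which is AI-based and has not been detailed here; it only illustrates why right angles help. If two of a scene's perpendicular directions can be found in the camera's frame, the third follows from a cross product, and together they form the camera's rotation. All names and the axis convention below are assumptions made for illustration.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )

def rotation_from_manhattan_directions(d1, d2):
    """Build a 3x3 rotation whose columns are the three scene axes in the camera frame.

    d1 and d2 are estimates of two perpendicular scene directions (hypothetical
    inputs, e.g. along a road and straight up a building). Gram-Schmidt removes
    any part of d2 that lies along d1, so small estimation errors do not break
    orthogonality.
    """
    x = normalize(d1)
    along_x = dot(d2, x)
    y = normalize(tuple(b - along_x * a for a, b in zip(x, d2)))
    z = cross(x, y)  # the third axis is fixed by the first two
    return [[x[i], y[i], z[i]] for i in range(3)]

# Example: a camera turned 30 degrees about the vertical axis.
angle = math.radians(30)
d1 = (math.cos(angle), 0.0, -math.sin(angle))  # horizontal scene axis as seen by the camera
d2 = (0.0, 1.0, 0.0)                           # vertical scene axis
R = rotation_from_manhattan_directions(d1, d2)

# Under the axis convention assumed here, the turn angle can be read back from R.
yaw_deg = math.degrees(math.atan2(R[0][2], R[2][2]))
print(f"recovered turn: {yaw_deg:.1f} degrees")
```

The hard part in practice is finding those scene directions in a heavily distorted fisheye image in the first place, which is the problem the quoted statement says the new method addresses.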

The proposed technology will be presented at the main conference of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2024, as a research outcome of the REAL-AI program for developing top human resources in the Panasonic group. The conference will be held in Seattle, Washington from June 17 to 21, 2024.

After graduating from Cardiff University with a Master's Degree in Journalism, Media and Communications, Leonie developed a love of photography after taking a year out to travel around the world.

While visiting countries such as Mongolia, Kazakhstan, Bangladesh and Ukraine with her trusty Nikon, Leonie learned how to capture the beauty of these inspiring places, and her photography has accompanied her various freelance travel features.

As well as travel photography, Leonie has a passion for wildlife photography, both in the UK and abroad.