Do you believe everything you see on social media? If so, you probably shouldn't. To take just one example, after a global warming protest in London’s Hyde Park in 2019, photos appeared showing the area covered in litter.

What hypocrites, right? Actually, no: those photos were taken in Mumbai, India. But how would you know that, unless you'd been at the protest or were willing to spend hours researching the topic?

Mainstream media isn't immune to this problem either. Thanks to staff cuts and a fast-moving news cycle, even esteemed newspaper sites often end up repurposing social media images without properly checking them.

It's an issue the R&D group at the New York Times is tackling head-on through the Content Authenticity Initiative (CAI), whose members also include Adobe, Twitter, Qualcomm and Truepic. Together, they've been developing technology that will certify details such as where and when a photo was taken.

The plan is that when a photo appears online, readers will be able to easily check its provenance, in a similar way to the hypothetical example below:

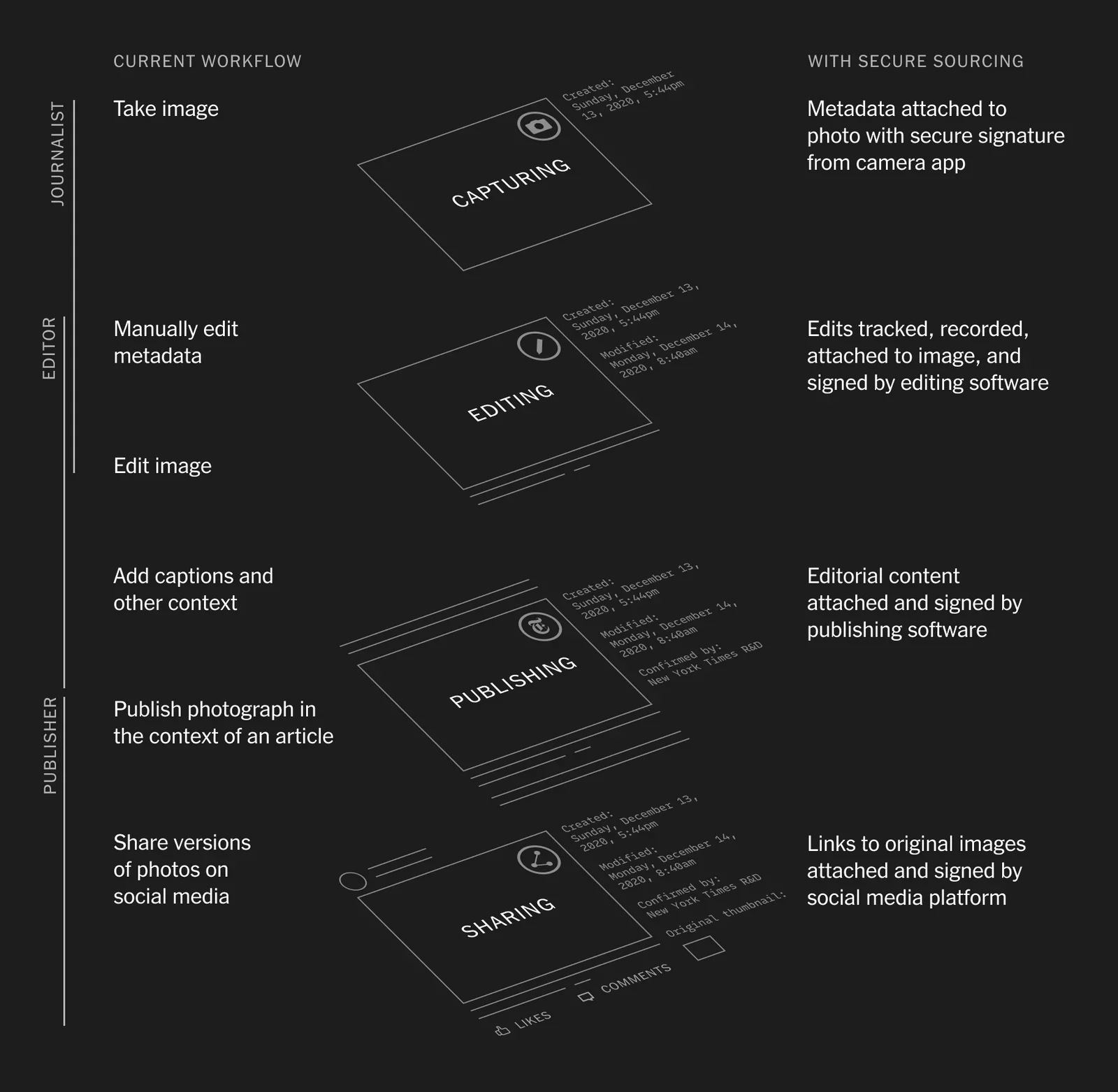

The CAI has now announced that a working prototype is up and running and will be available to photojournalists and editors "soon". The technology, which is based on blockchain, will allow photojournalists, editors and publishers to 'sign' an image at each stage of the process, creating what the CAI calls "an end-to-end chain of trust for the photo's metadata".

In other words, it’s a bit like the concept of a ‘chain of custody’ in the legal profession. Each photo is embedded with metadata that includes not only when and where the photo was taken, but also what specific edits have been made to it in Photoshop, and the organisation responsible for publication.
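To make the 'chain of custody' idea more concrete, here is a minimal conceptual sketch in Python. It is not the CAI's actual data format or code. Each party (camera, editor, publisher) appends a record containing a hash of the current image and the signature of the previous record, so altering any step or the final image breaks verification. The names `KEYS`, `sign_step` and `verify_chain` are hypothetical, and HMAC with shared secret keys is used here purely as a stand-in for the public-key signatures and certificates a real provenance system would rely on.

```python
import hashlib
import hmac
import json

# Hypothetical per-party secret keys. A real provenance system would use
# public-key signatures and certificates; HMAC keeps this sketch stdlib-only.
KEYS = {
    "camera": b"camera-secret",
    "editor": b"editor-secret",
    "publisher": b"publisher-secret",
}


def sign_step(chain, actor, action, image_bytes):
    """Append a signed record that links back to the previous record's signature."""
    prev_sig = chain[-1]["signature"] if chain else ""
    record = {
        "actor": actor,
        "action": action,
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "prev_signature": prev_sig,
    }
    payload = json.dumps(record, sort_keys=True).encode()
    record["signature"] = hmac.new(KEYS[actor], payload, hashlib.sha256).hexdigest()
    chain.append(record)
    return chain


def verify_chain(chain, final_image_bytes):
    """Check every signature and link, then compare the last hash with the image."""
    prev_sig = ""
    for record in chain:
        body = {k: v for k, v in record.items() if k != "signature"}
        if body["prev_signature"] != prev_sig:
            return False  # a record was removed, reordered or re-linked
        payload = json.dumps(body, sort_keys=True).encode()
        expected = hmac.new(KEYS[record["actor"]], payload, hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, record["signature"]):
            return False  # a record's contents were altered after signing
        prev_sig = record["signature"]
    final_hash = hashlib.sha256(final_image_bytes).hexdigest()
    return bool(chain) and chain[-1]["image_sha256"] == final_hash


# Capture, edit and publish, then check the published image against the chain.
chain = []
sign_step(chain, "camera", "capture", b"raw pixels")
sign_step(chain, "editor", "crop in Photoshop", b"cropped pixels")
sign_step(chain, "publisher", "publish", b"cropped pixels")
print(verify_chain(chain, b"cropped pixels"))   # True
print(verify_chain(chain, b"doctored pixels"))  # False
```

Because each record points to the one before it, a reader can trace a published photo back to its capture, and any undisclosed change along the way shows up as a failed check.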

You can see an example of what the editorial process might look like, taken from the NYT R&D site, below. This involves secure capture using a Qualcomm/Truepic test device, editing via Photoshop, and publishing via the newspaper’s Prismic content management system.

It's a very exciting prospect for anyone involved in photography, image editing or the media, but it's also important to point out what this new technology is NOT.

It's not a way for photographers to enforce copyright over their work. As the CAI states on its FAQ page: "The CAI will not be enforcing any permissions around access to the assets themselves."

It's also not an attack on personal privacy. People with unique privacy needs, such as human rights workers in authoritarian countries, will have the option to redact personally identifiable information.

Most obviously, the new tech is not in itself a way to stop fake images appearing on social media. That will continue to happen and probably always will. It is, however, a potentially valuable way to authenticate images from reliable sources, and restore public trust in the concept of responsible photojournalism.

So how can photographers get on board? At the moment, the technology is in closed beta testing, so it's basically a matter of waiting for it to become commercially available. In the meantime, the CAI suggests that anyone interested subscribe to its mailing list for news and updates on when these tools will be available (the subscription form is at the bottom of the Contact page).

Tom May is a freelance writer and editor specializing in art, photography, design and travel. He has been editor of Professional Photography magazine, associate editor at Creative Bloq, and deputy editor at net magazine. He has also worked for a wide range of mainstream titles including The Sun, Radio Times, NME, T3, Heat, Company and Bella.