If you've been reading reviews on Digital Camera World lately, you might have noticed a pattern emerging. Take, for example, Apple's latest and greatest phone to date, the iPhone 16 Pro Max. Our reviewer wrote: "The camera may have virtually identical hardware to its predecessor, but its pictures do look better thanks to updated processing."

Or how about the iPhone's big Android rival, the Galaxy S24 Ultra? "For the most part, the camera hardware is identical to the S23 Ultra," our writer noted. "The main noticeable difference is that the image processing has been tweaked."

I could offer plenty more examples, but you get the point. In short, as we explained in our article on the best camera phones: "The hardware in many of this year's phones has received fairly incremental improvements compared to previous models. Instead, it's the computational side where the biggest photographic enhancements could be found."

So have we reached the limits of phone camera hardware? To answer that question, let's first look at how we got here…

The story so far

Camera phones are a pretty recent invention in the grand scheme of things. The very first was the J-SH04, introduced in Japan in 2001 by Sharp Corporation. It was pretty basic stuff, with a resolution of 0.1MP and no autofocus. And for the first few years, cameras were seen as gimmicky add-ons to phones that were still (imagine it!) mainly used for making calls.

The tech quickly improved, though. The first smartphone with a 1MP camera was the Nokia 7610, released in 2004. The first to hit 5MP was the Samsung SGH-G800 in October 2007. Just two years later, in 2009, the Samsung Pixon12 hit the 12MP mark. Woo-hoo!

For anyone upgrading their phones regularly, it's all felt like a wild ride. And it's one that's still continuing to this day. By the second quarter of 2024, Counterpoint Research reported that the average primary camera resolution of smartphones had doubled from 27MP in Q2 2020 to a record high of 54MP.

Overall, more than 50% of smartphones shipped during Q2 2024 featured cameras with at least 50MP resolution. And each leap in megapixels, up to the 108MP-200MP flagship models at the top end of the market, has felt like a game-changer in terms of picture quality.

Recently, though, that excitement has begun to fade a little, especially at the more affordable end of the price spectrum.

For instance, shoppers are noticing that jumping from, say, a 48MP phone to a 60MP one doesn't make much of a difference in picture quality. In some instances, the results might even be worse. So what's going on?

Megapixels aren't everything

The confusion lies in the commonly held (but false) notion that a large number of megapixels is all you need for a great picture. It isn't. Photography is, as the saying goes, "painting with light". So the really important thing is how much light reaches your camera's sensor. And that means the larger the lens and the sensor behind it, the better.

Conversely, if you jump up from 48MP to 60MP, but the phone has the same-size lens, all you're really doing is dividing the same picture into a few million more pieces. (That's an oversimplification, but you get the idea.) In short, when you're shopping for a new phone these days, it's better to focus on sensor size than megapixel count.

The good news is that many flagship phones are now building in larger sensors, with some models such as the Xiaomi 14 Ultra now featuring one-inch sensors, compared with the more typical size of around 0.5 inch. And others are following suit, with Sony projecting in its 2024 report that the average sensor size will increase by more than 200% from 2019 to 2030.
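To put rough numbers on that idea, here's a minimal sketch in Python. It assumes square pixels spread evenly across the whole sensor, and it uses nominal sensor dimensions (about 6.4 x 4.8mm for a 0.5-inch type and about 12.8 x 9.6mm for a one-inch type) that are illustrative assumptions, not figures taken from any particular phone's spec sheet. The function names are made up for this example.

```python
import math


def pixel_area_um2(width_mm, height_mm, megapixels):
    """Approximate area of a single pixel in square micrometres.

    Assumes the whole sensor surface is divided evenly between pixels.
    """
    sensor_area_um2 = (width_mm * 1000) * (height_mm * 1000)
    return sensor_area_um2 / (megapixels * 1_000_000)


def pixel_pitch_um(width_mm, height_mm, megapixels):
    """Approximate side length of one square pixel in micrometres."""
    return math.sqrt(pixel_area_um2(width_mm, height_mm, megapixels))


# Assumed nominal dimensions in mm (illustrative only, not from a spec sheet).
SENSORS = {
    "0.5-inch type": (6.4, 4.8),
    "one-inch type": (12.8, 9.6),
}

if __name__ == "__main__":
    for name, (width, height) in SENSORS.items():
        for megapixels in (48, 60):
            pitch = pixel_pitch_um(width, height, megapixels)
            print(f"{name}, {megapixels}MP: about {pitch:.2f} micrometres per pixel")
```

Under those assumptions, moving from 48MP to 60MP on the same sensor leaves each pixel with about 20% less light-gathering area. Doubling the sensor's width and height at the same megapixel count gives each pixel roughly four times the area. Real sensors complicate this with pixel binning and microlenses, so treat it as back-of-the-envelope arithmetic only.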

A natural limit

Unfortunately, there's only so far the industry can go here... because people like their smartphones to be small, light and portable. So you can only make the camera so big before the whole device becomes too bulky and cumbersome to bother with.

That said, there is some wriggle room. The iPhone 16 Pro Max, for example, is the biggest iPhone to date, with a 6.9-inch display. And foldable tech gives you more space still, with the Pixel 9 Pro Fold opening up to a screen that measures 8 inches across the diagonal.

But ultimately, there's a size limit before a phone becomes something so large, it ceases to be a phone, or even a phablet: it becomes something else entirely. So without going there, how else can smartphone makers improve their cameras?

Quality not size

Well, lens size is only one element; there's also the issue of lens quality. And at least in theory, there's no physical limit to making improvements here.

The most obvious point is that almost all modern smartphones use camera lens elements made of plastic materials instead of glass. There are very good reasons for this, in terms of cost and convenience. But switching to glass would be an obvious way to boost lens quality if you wanted to.

Apple has already started down this line in a small way, by introducing hybrid lenses made with a combination of glass and plastic from the iPhone 15 series onwards. The tetraprism lens used in the iPhone 15 Pro Max is a glass-plastic hybrid that's called 1G3P, because it includes one glass element to three plastic. It's likely that over time, Apple will find a way of upping the glass ratio and further improving the lens quality as a result.

Other areas in which smartphone manufacturers are working to boost quality include the following:

- Advanced lens coatings, such as Zeiss's T* coating featured on high-end models like the Xperia 1 V, can reduce reflections, improve light transmission and minimise flare and ghosting.

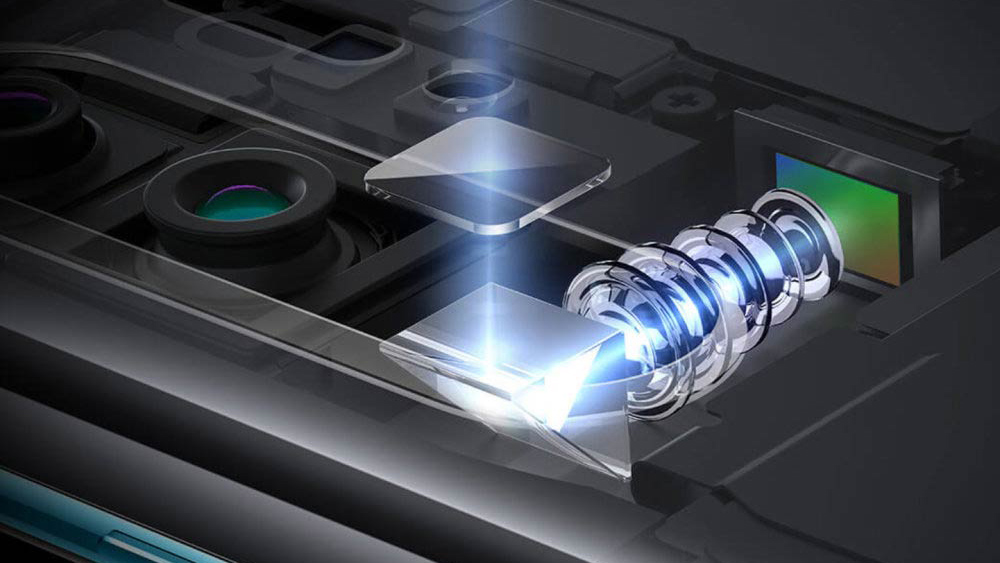

- Periscope lenses, found in phones such as the Samsung Galaxy S23 Ultra, use a prism to bend light at a 90-degree angle, allowing for longer focal lengths and higher optical zoom without needing to increase the phone's thickness. (Learn more in our articles What is a periscope lens? and Samsung wants to revolutionize telephoto camera modules.)

- Improvements to optical image stabilization systems have the potential to further reduce camera shake, especially in low light. Both Apple and Samsung have recently filed patents in this area.

Conclusion

In conclusion, we haven't reached the limits of phone camera hardware just yet. But at the same time, it feels like we are entering an era of diminishing returns.

That's why, right now, computational photography (leveraging algorithms to enhance sharpness, detail and color) has emerged as the differentiator, making AI-driven processing just as important as hardware. Indeed, it's becoming so widespread that even mid-range devices are increasingly able to produce professional-looking images.

Yet reliance on computational methods isn't without drawbacks: these highly processed images can often look overly artificial. You can't, unfortunately, make a fabulous photo through software alone; you also need the underlying hardware to provide sufficient raw data. Otherwise, you're essentially asking the computer to make up the details based on its algorithmic imagination. That might look pretty, but it isn't photography.

So it's in all our interests that manufacturers' current production experiments bear fruit. That way, eventually, hardware and software can start moving together once more, and bring us into a shiny new future for smartphone photography. Here's hoping, anyway…

Tom May is a freelance writer and editor specializing in art, photography, design and travel. He has been editor of Professional Photography magazine, associate editor at Creative Bloq, and deputy editor at net magazine. He has also worked for a wide range of mainstream titles including The Sun, Radio Times, NME, T3, Heat, Company and Bella.